AI-generated music is Russian roulette for copyright law

Sometimes AI models "memorize" their datasets and commit copyright infringement.

Over the last few months, the Internet has filled up with visual artwork generated by AI models like Stable Diffusion. These models are typically trained on large collections of images crawled from the Internet—without asking for permission from those images’ owners.

The AI is capable of imitating specific artists—like Greg Rutkowski. He disapproves of his art being included in AI training datasets without his consent, and then used to generate volumes of art in his style.

Some artists are hoping they can sue AI creators or users for copyright infringement. However, it is typically impossible to sue somebody for infringement if all they copied was your style1. And usually, style is the only thing copied by AI art.

But AI art is in significant danger from copyright law:

In rare cases, AI art models memorize copyrighted works—and repeat them verbatim.

Whenever memorization happens, there is some risk that users of AI art are infringing on memorized works.

Copyright law is tougher for music—making it especially risky to generate music with AI.

What is a derivative work?

If you take a beautiful photograph, you own the copyright to it. However, if I edit your photo, I have created a derivative work of your original work.

If I want to distribute my derivative work, I need your permission and to obey any restrictions you set—unless an exception like Fair Use applies2.

If my photo differs substantially from yours, it is more likely to be a transformative work—where permission from the original work’s copyright owner is not required.

The AI memorization problem

Let’s pretend that you and I want to create an image-generating AI model—like Stable Diffusion.

First, we collect a training dataset of millions of images that we want to imitate. For the moment, we won’t worry about if the images are copyrighted. Because of legal precedents like Authors Guild v. Google, the tech industry assumes that AI models are transformative works—and not derivative works of the training dataset3.

Next, we “train” our AI model until it learns to generate new images like the ones in our training dataset. The model itself doesn’t contain any images, but the training process teaches it high-level concepts about how to draw new images.

When we are done training, people can provide prompts to our model to generate images based on the high-level concepts learned during training.

But if we trained our model poorly, it might memorize some training images and spit them out when generating new images.

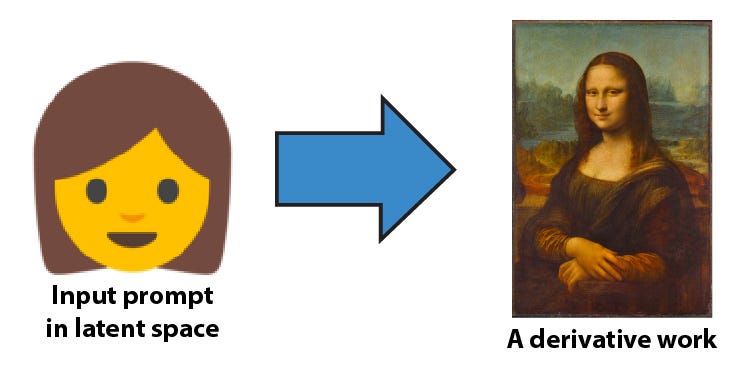

In the below example, we didn’t want our model to spit out the Mona Lisa we trained on. Instead, we were hoping for a new image.

The dark copyright implications of memorization

What happens if our model memorized copyrighted training images?

Then, any generated images could be derivative works. In which case, we would need permission to use those generated images—or risk committing copyright infringement.

Memorization creates lopsided legal risk for us:

Easy to prove guilt. Anybody can identify that your AI-generated image is derived from somebody else’s image.

Impossible to prove innocence. There is no way to verify that a sufficiently large AI model has never memorized any training data.

AIs never confess. The images you generate could be derivative works—and you wouldn’t know it. The AI won’t warn you if you’re about to commit copyright infringement.

Memorization in Stable Diffusion

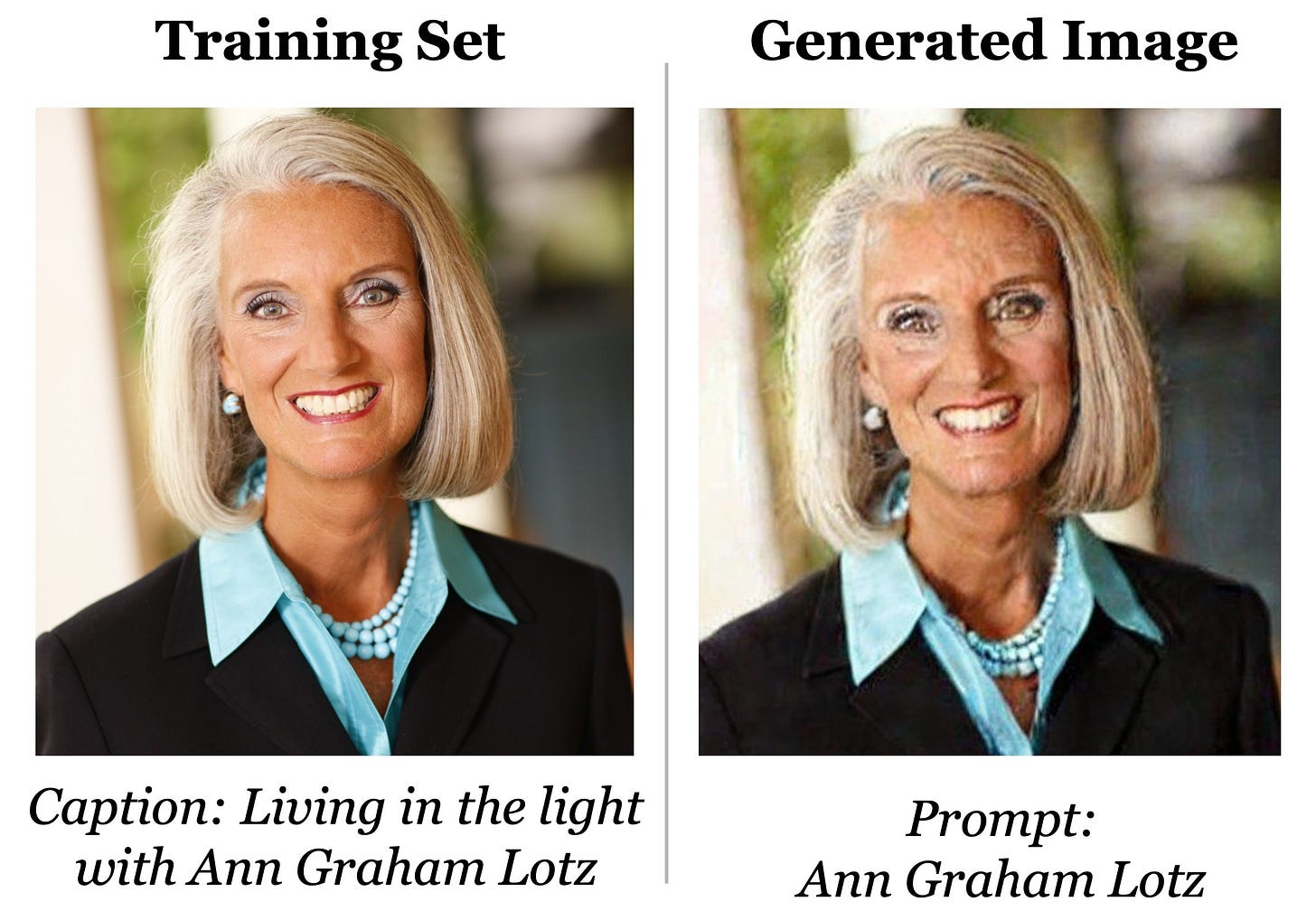

Recently, Nicholas Carlini and a team of researchers generated 175 million images using Stable Diffusion—finding 109 images that indicate memorization. Below is one of the images they found:

Carlini and his team have proven that Stable Diffusion can generate derivative works. Therefore, it is possible (but unlikely) that a user could unknowingly generate an infringing derivative work.

The lawsuits start flying

Meanwhile, Matthew Butterick—a Harvard-educated designer, software engineer, and lawyer—has been leading his own investigation.

Last month, Butterick and a team of lawyers filed a class action lawsuit against three companies using Stable Diffusion.

He argues that Stable Diffusion partially memorizes training data by design. As a result, the generated images are derivative works of the training dataset4.

This lawsuit is Butterick’s second foray into AI law. Last November, he and his team filed a class-action lawsuit against the developer tools company GitHub over Copilot—their AI that writes computer programs. Copilot may have a memorization issue as well. Engineers have raised concerns that Copilot will generate code that is nearly identical to existing copyrighted code.

What does memorization look like for music?

I have previously written about music-generating AI models—like OpenAI Jukebox and TensorFlow Magenta. Since then, a handful of newer models like Riffusion have launched.

But generating music is more complicated than images—both from a technical and a legal perspective.

Unlike an image, a song has two copyrights: one for the sound recording and another for the music composition.

And it’s frighteningly easy to infringe upon either one. Some example lawsuits:

Sound recording. The rap group N.W.A. lost a copyright infringement suit because they sampled a distorted two-second-long guitar chord from Funkadelic’s “Get Off Your Ass and Jam” without asking for permission.

Composition. Robin Thicke and Pharrell had to pay $5.3 million in damages to Marvin Gaye’s family because “Blurred Lines”’s composition vaguely resembles Gaye’s “Got to Give It Up.”

Now imagine if we trained an AI to generate recorded music. It is possible for the AI to memorize sound recordings, compositions, or both.

This creates two ways for you to get sued:

Your AI could generate a new song that contains two seconds of distorted audio from the training set.

Marvin Gaye’s family could insist that your AI’s songwriting style is just like his.

If we were generating something else—like text—we wouldn’t have to worry about memorization as much. For example, if you are writing a book review, you can quote short snippets from the book and typically have it considered as transformative use.

But the laws and precedents in music are extra harsh. As a result, it is unlikely that any amount of memorization would be considered Fair Use—although this is a question that needs to be tested in court.

Is there a future for AI music?

There are relatively few legal or economic barriers to building an AI startup that generates text, images, or software.

However, AI music-generation businesses face a uniquely dismal outlook:

Aggressive rightsholders. Universal, Sony, and Warner own the three largest record labels and music publishers. They have an enormous war chest to finance infringement lawsuits against AI art.

Lack of access to training data. Universal, Sony, and Warner may also be able to use their influence to prevent other businesses from training models on their music without their permission. Similarly, in the image world, Getty Images is suing Stability AI for allegedly scraping millions of images without permission.

Automated copyright detection. Systems like YouTube Content ID and Pex will become good enough to hunt down snippets of memorized audio in AI-generated songs. People who upload memorized audio risk DMCA takedowns and infringement lawsuits.

The safest way to generate AI music is to avoid using other people’s copyrighted data without permission:

Use public domain music. Sound recordings made before 1923 are no longer protected by US copyright law.

Use your own music. The musician Holly Herndon used recordings of her own voice as the training dataset for Spawn—Holly’s AI-powered vocalist.

Ask for permission. Alternatively, Creative Commons-licensed music may allow for creating derivative works. However, these licenses often come with restrictions on attribution and commercial use.

Further reading

The current legal cases against generative AI are just the beginning by Kyle Wiggers for TechCrunch

Why music AI companies are not flirting with copyright infringement by Yung Spielburg for Water & Music

By the way, I’m looking for a new job as a software engineer. I have ten years of experience—mostly in Python, Rust, and JavaScript. You can read more about my background at jamesmishra.com. You can email me at j@jamesmishra.com.

US copyright law does not protect an artist’s style. However, depending on the situation, it is possible that another area of US law—like trademarks, patents, or consumer protection—applies. I don’t know. I’m not a lawyer.

The United States Copyright Office’s Circular 14 states that “Only the owner of copyright in a work has the right to prepare, or to authorize someone else to create, an adaptation of that work.” This assumes that the use of the derivative work does not fall under Fair Use or another exception.

The Authors Guild v. Google case is from back when Google first launched Google Books, where they:

scanned printed books.

converted the scanned pages into text using optical character recognition (OCR) software.

built a Google-style search engine on top of the text.

Google did all this without asking for permission from book publishers. The Authors’ Guild sued—being particularly concerned that Google Books showed short snippets of books in search. However, the courts ruled in Google’s favor—declaring the Google Books search index to be a transformative work.

Since this case, tech companies have generally assumed building other types of indexes and models will also be considered transformative use. However, this assumption may be wrong for generative AI models that create new works capable of competing against the original works in the training dataset.

The lawsuit’s webpage doesn’t mention the word memorization—using the word compression instead. In AI, the word compression describes how detailed input data is turned into lightweight vectors representing higher-level concepts. This is different from:

lossless compression: where you can shrink a file by putting it in a ZIP container and then extract the identical file again.

lossy compression: where you can shrink a WAV file by converting it into an MP3 file. The MP3 file sounds almost exactly like the WAV file, but the MP3 is smaller because the conversion process discarded data that was inaudible to the human ear.

Butterick argues that Stable Diffusion’s neural network encoding/compression system is philosophically equivalent to lossy file compression. From a copyright law perspective, an MP3 file created from a WAV file is not a transformative work. Therefore, Butterick argues, neither should Stable Diffusion-generated images be considered transformative works.

There is some strong machine learning philosophy behind his argument, but there still are some unanswered questions. If Butterick’s legal argument can be taken as technical truth, then we would expect Nicholas Carlini’s team to have found more instances of memorization in Stable Diffusion.

Carlini’s team only found 109 memorized images out of 175 million generated images—which suggests that Stable Diffusion usually does learn high-level concepts, and that compression is not memorization in this case.

Ultimately, the US court system must decide what is the acceptable amount of memorization.